Introduction

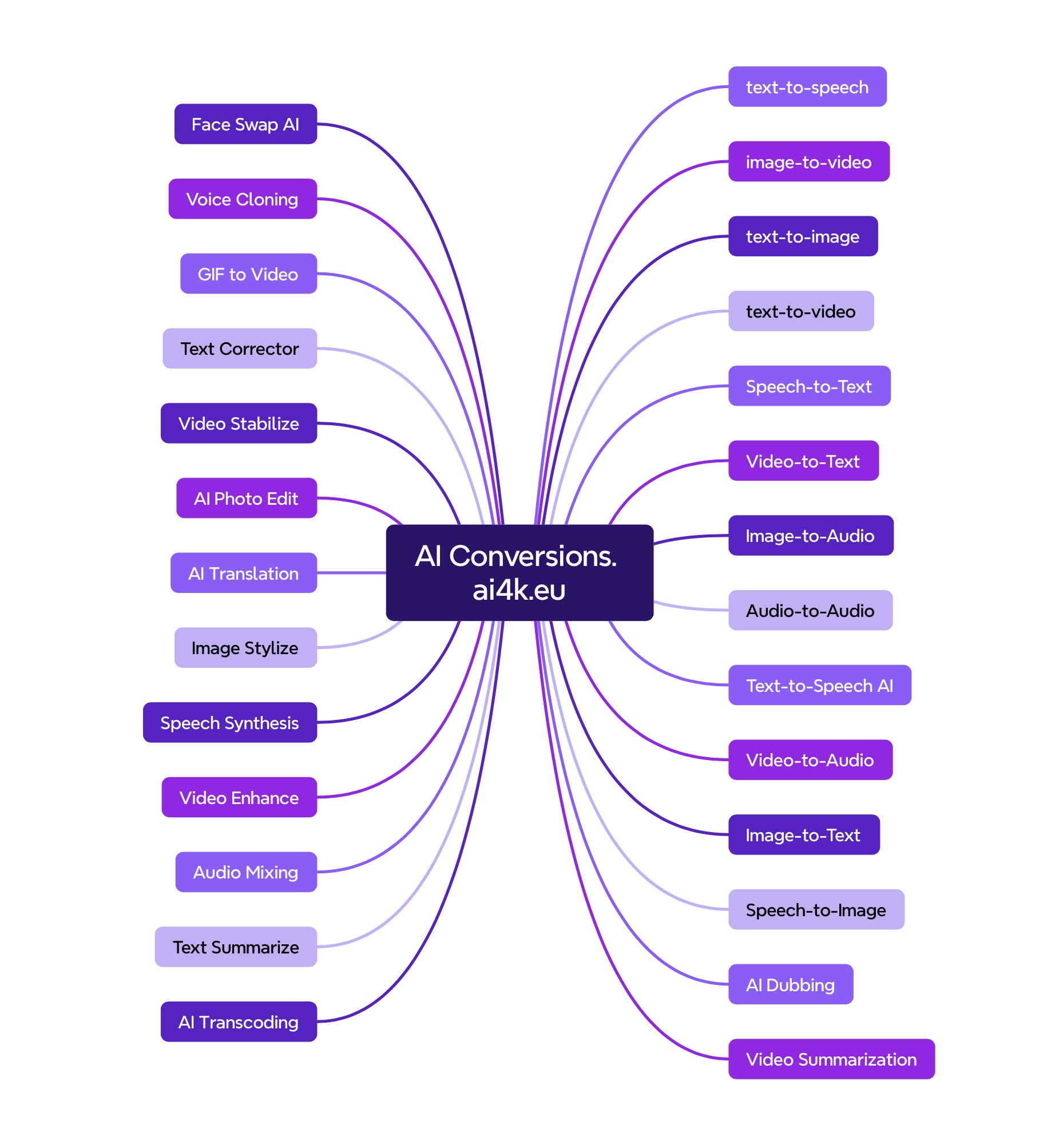

Artificial intelligence and machine learning have made tremendous strides in understanding and synthesizing different forms of data. A key application of AI is conversion: taking one type of data as input and generating or translating it into another format. This enables valuable downstream uses across industries.

Common data conversion problems that AI has helped tackle include translating text between languages with tools like Google Translate, converting speech to written words with speech recognition, and generating captions for images and videos to make them searchable and accessible.

As models and techniques have advanced, the scope of conversion abilities has expanded. AI can now perform complex cross-modal conversions, such as translating text descriptions into visual representations, which has opened up new creative possibilities.

In this post, we will explore some of the leading AI tools available for a variety of conversion types, including text summarization, language translation, speech recognition, text-to-speech, image captioning, and newer areas like text-to-image generation. The goal is to provide an overview of the state of the art in data conversion using artificial intelligence.