AI Creativity Comes from Human and LLM Interaction, Not the Model Alone

When people talk about “AI creativity,” it’s easy to misunderstand what’s really happening. Large Language Models (LLMs) like ChatGPT or Claude do not create ideas on their own. They don’t invent, imagine, or innovate the way humans do. They generate responses by recognizing patterns in their training data, shaped and directed by the human’s prompts and ongoing interaction.

The real creativity happens in the interaction between a human and the model.

A person brings intent, context, judgment, and goals. The model brings structure, speed, and synthesis. Together, this collaboration can produce something new: a fresh idea, a redefined concept, a sharper insight.

For example, a business owner might ask a model to help write about a trend they’re noticing in their market. The AI helps organize it, compare it to other data, or present it in a compelling way. But the seed of that idea came from the human.

That’s why originality and attribution matter. If AI-generated content reflects human thinking and experience, it deserves recognition. Not because the model is creative, but because it helped someone express something in a way that might not have been possible before.

So in reality, the “creative” output of an LLM is the result of guided collaboration, one where the human remains the source of purpose and originality.

Is AI-Generated Content Penalized?

Not directly. Google has made it clear (at least publicly) that what matters is usefulness, not whether content was written by a human or AI. What gets penalized is low-quality, spammy, or automated content with no real purpose.

“AI isn’t bad for SEO. What matters is quality and relevance.”

Google Search Central, 2024

Who knows if Google is directly penalizing AI-generated content, especially content produced by models other than Gemini? Given the recent court cases, their ethical track record is already questionable.

What is clear, however, is that the current SEO collapse is largely driven by Google inserting its own AI-generated answers directly into search results, often at the expense of organic content.

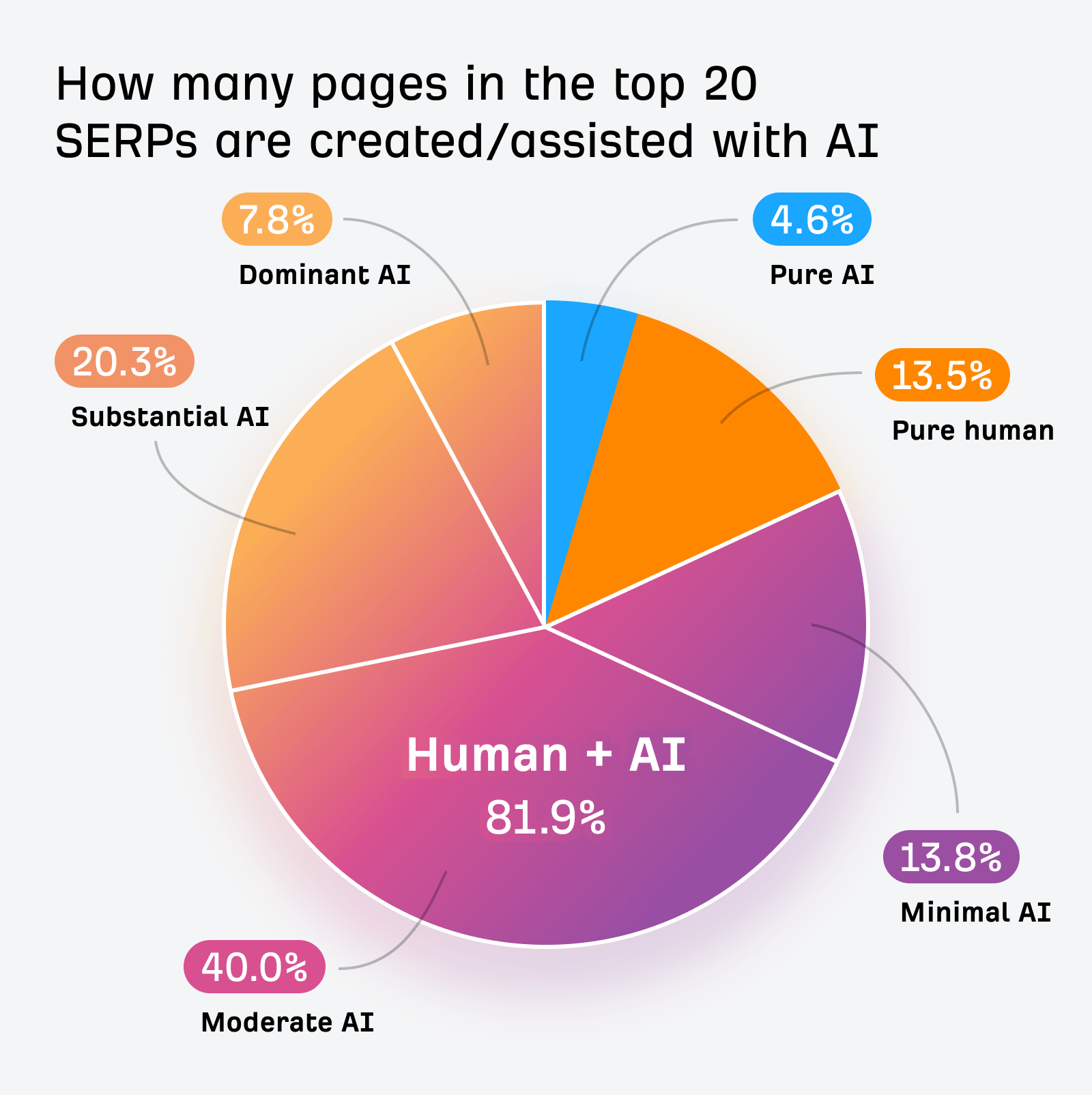

Key Stats for 2025

- 71% of companies already use generative AI for content creation (HubSpot AI Trends 2025)

- Blogs that refresh posts with AI see a 32% boost in organic traffic (Ahrefs)

- Articles optimized with AI-driven copy have a 23% higher CTR than traditional content

- By 2026, 80% of digital content will be AI-generated or AI-assisted (Gartner)

Why Google Might Resist AI Content

Frustration has been growing ever since Google began indexing the web and requiring website owners to do SEO and follow its guidelines. Those guidelines were often aggressively enforced through updates that felt like resets, meant to strip away ranking advantages and push sites into paying for ads on the SERP.

Then one day, under pressure from OpenAI and the disruption caused by ChatGPT, Google seemed to throw its hands up and say: “You know what? Fuck it.” Enter Google AI Overviews.

Google might resist AI content because it directly threatens its business model:

- Fewer ad clicks: If AI gives a good answer, users do not need to click paid ads

- Fewer searches: Tools like ChatGPT or Claude answer questions directly

- Less control of traffic: If users stay inside an AI tool, Google loses influence

This has led Google to develop features like AI Overviews (direct answers in the search results) while at the same time discouraging some types of AI-generated content unless it follows strict guidelines.